The accursed renderer, the unknowable server

2025-06-24

I find myself at an impasse with my programming endeavors. There are 2 main directions that I've increasingly been trying to go in for the past few years: making a proper videogame, and making websites. In both directions I have run into what seems like an impenetrable wall.

Both of these blockers are mostly caused by my lack of knowledge about the subject, and my inability to find that information anywhere. Search engines have become totally useless since they have a tendency to just completely ignore half of what I searched for, and act as if I only typed the 1 most popular word of my query. So the only thing I can do is try to solve the problem myself.

I want to make everything myself for reasons that I might elaborate more on some day (dependency minimalism), but occasionally I consider just giving up and using some bloated library/engine/whatever to do things for me.

The renderer

In order to make a videogame, you need to render graphics. I love drawing pixels by writing colors into memory with my own code, but this is very costly, so it's not practical for videogames. Using the GPU is a must for most kinds of games.

For the longest time I have been blocked by the graphics API (OpenGL) itself because I just hate everything about it and don't want to interact with it. It makes me depressed and demotivated. That's still true to some extent, but now I'm mostly limited by my lack of experience with renderer design.

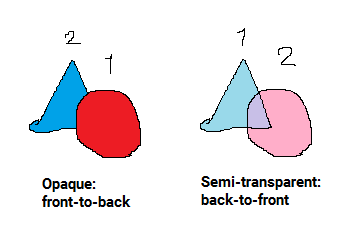

The biggest problem I have with rendering is sorting. If you're drawing opaque graphics, it's beneficial to draw the objects in front first, and the objects in the back last. This way the GPU can use the depth buffer to skip work: if it detects that something was already drawn in front of the pixel it's trying to draw, it doesn't have to render that pixel. Sorting like this isn't necessary. It can improve performance, but it may be more valuable to batch objects together than to sort them perfectly.

However, if you're drawing semi-transparent objects such as glowing balls of light, you need to draw the objects in the back first, and this is mandatory because otherwise the pixels won't blend correctly.

You may also want to draw back-to-front if your graphics have anti-aliased smooth edges, since those are semi-transparent pixels after all.
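As a minimal sketch of the two orderings described above, here is one common arrangement: opaque sprites front-to-back, then translucent sprites back-to-front. The `Sprite` type, its fields, and the convention that a larger `depth` means farther from the camera are all assumptions made for illustration, not part of any real renderer.

```python
from dataclasses import dataclass

@dataclass
class Sprite:
    name: str
    depth: float   # assumed convention: larger depth = farther from the camera
    opaque: bool

def draw_order(sprites):
    """Return opaque sprites front-to-back, then translucent ones back-to-front."""
    # Opaque: nearest first, so the depth test can reject hidden pixels early.
    opaque = sorted((s for s in sprites if s.opaque), key=lambda s: s.depth)
    # Translucent: farthest first, so blending happens over what is behind.
    translucent = sorted((s for s in sprites if not s.opaque),
                         key=lambda s: s.depth, reverse=True)
    return opaque + translucent

scene = [
    Sprite("wall", 9.0, True),
    Sprite("glow", 2.0, False),
    Sprite("player", 3.0, True),
    Sprite("smoke", 6.0, False),
]
order = [s.name for s in draw_order(scene)]
print(order)  # ['player', 'wall', 'smoke', 'glow']
```

This only works while every drawable thing fits into one flat list of sprites, which is exactly the assumption that breaks down next.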

One way to approach sorting is to make a list of all entities visible on-screen, and then sort the list. However, what if you have an enemy that is made up of multiple sprites?

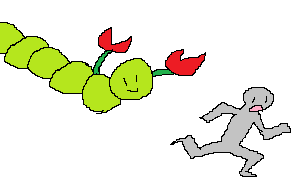

You can't sort Mr. Centipede here because he's made up of multiple parts that are in different locations. So what exactly DO you sort? What is the thing that you put into the sortable array? You need a way to talk about each sprite in isolation. So maybe you can just create a list of "sprite" objects and sort those?

But what happens if you want to draw a dynamic polygon shape, such as a line/path/circle? You have to dynamically fill in a buffer with vertices, which isn't difficult in itself, but how do you sort this dynamic batch of vertices with all the other sprites and other dynamic batches of vertices on the scene? Also, what if you want to change shaders or textures? You don't need to just talk about the sprite, you also need to know what shaders and buffers (and location in the buffer) and textures it's using. What kind of amalgamation do you need to create to represent the sortable objects so that you can sort them?

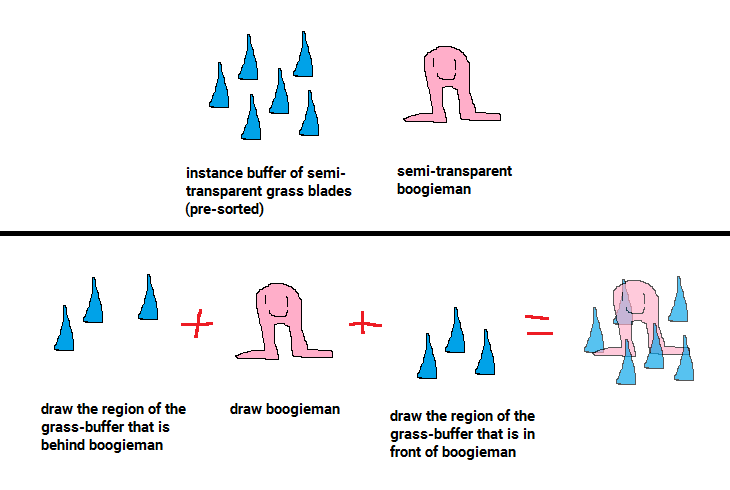

It still gets worse. What if I already have a cached instance buffer filled with grass blades and miscellaneous foliage or furniture sprites? How do I sort that with all the other objects, especially if the grass and other objects are meant to be semi-transparent and thus need to be correctly sorted? Here's one of the many things that went through my mind:

Splicing and interleaving and sorting buffers like this just gets way too complicated for me since I don't have a lot of experience with many parts of GPU rendering and graphics APIs.

When working on Scavgame, I came to the conclusion that I don't have what it takes to make the renderer for such a game yet. I have a feeling that there's a relatively simple and elegant solution to all of this. I have some ideas and I've tried to design a solution, but it's too overwhelming for me at the moment.

My current idea is to pretend to fill the final GPU buffers, but actually just write the data into a special fake buffer and store its position along with shader/texture/buffer IDs/positions and the object's depth. This way you can create a generic and minimal representation for everything and sort them all together, while also retaining an arbitrary amount of information for any given object since the data for the GPU was already created. All you need to do then is move the data into the real GPU buffers and do whatever context switches and draw calls you need in-between.
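A rough sketch of how that fake-buffer idea might look, with no actual GPU involved. Everything here is hypothetical: the names `DrawRecord` and `StagingRenderer`, the integer shader/texture IDs, and the choice to sort everything back-to-front and merge neighbours that share state into one batch. A real renderer would upload `final` to a vertex buffer and issue one draw call per batch.

```python
from dataclasses import dataclass

@dataclass
class DrawRecord:
    depth: float    # larger = farther away (assumed convention)
    shader: int     # hypothetical shader ID
    texture: int    # hypothetical texture ID
    offset: int     # where this object's data starts in the staging buffer
    length: int     # how many bytes it occupies

class StagingRenderer:
    def __init__(self):
        self.staging = bytearray()   # the "fake" buffer
        self.records = []            # small sortable records pointing into it

    def submit(self, depth, shader, texture, vertex_bytes):
        self.records.append(
            DrawRecord(depth, shader, texture, len(self.staging), len(vertex_bytes)))
        self.staging += vertex_bytes

    def flush(self):
        """Sort records back-to-front, copy data in that order, build batches."""
        ordered = sorted(self.records, key=lambda r: r.depth, reverse=True)
        final = bytearray()
        batches = []   # each entry: [shader, texture, start, byte_count]
        for r in ordered:
            start = len(final)
            final += self.staging[r.offset:r.offset + r.length]
            last = batches[-1] if batches else None
            if last and last[0] == r.shader and last[1] == r.texture:
                last[3] += r.length          # same state: extend the batch
            else:
                batches.append([r.shader, r.texture, start, r.length])
        return bytes(final), [tuple(b) for b in batches]

r = StagingRenderer()
r.submit(1.0, 0, 0, b"AAAA")
r.submit(5.0, 0, 0, b"BBBB")
r.submit(3.0, 0, 1, b"CC")
r.submit(4.0, 0, 0, b"DDD")
final, batches = r.flush()
print(final)    # b'BBBBDDDCCAAAA'
print(batches)  # [(0, 0, 0, 7), (0, 1, 7, 2), (0, 0, 9, 4)]
```

The records are tiny and uniform, so sprites, dynamic polygons and cached instance data can all be sorted the same way, at the cost of one extra copy of the vertex data per frame.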

I will put Scavgame away for now and work on something that can make do with simpler graphics. Possibly Volve. That said, I'm too demoralized by all this rendering stuff so I have been struggling to find the motivation to work on a renderer for Volve, especially since I've already made 2 attempts at it in the past.

As a side note, certain kinds of graphics require you to draw multiple objects. For example, if you want to draw a lamp, you may want to draw the light along with the transparent objects, but the lamp itself with the opaque objects. There are also considerations like multiple render targets (for glowing luminescent pixels or whatever) which complicate things further.

As a final note, there is the option of "order-independent transparency". It is basically required for 3D because sorting everything in 3D is too complicated (and in some cases impossible), but it's also inherently restricting (notably, blending effects don't work well with it), as well as more complicated and expensive.

The server

In order to make a website, you will need a server.

Almost everyone who writes backend server code will do it on top of some existing server software or runtime like Apache or Node.js. Therefore any and all search engine results for making a server from scratch are completely useless because all you'll get back is "HOW TO DO [SIMPLE THING] WITH NODE.JS EXPRESS™ IN 5 MINUTES".

Making a server from scratch is actually pretty simple, but an "I made a socket server haha" kind of server has nothing in common with a real, abuse-proof, high bandwidth server. You can follow a socket programming tutorial and easily make a server, but what happens if I open 5,000,000 sockets to your server? Assuming your server didn't crash due to lack of memory, what if I then try to upload a 1 GB file from all of them simultaneously? You can't exactly allocate 5 petabytes of memory or create a 5 petabyte file. What if I open as many sockets as your server allows and then do nothing with them, but I also never close them? What if each socket tries to open a different 1 MB file? What if I tell the server that I'm about to upload a 20 GB file, but I only send 1 byte per second?
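In isolation, two of those abuse cases (the oversized upload and the 1-byte-per-second upload) can be handled with a size cap and a minimum transfer rate. The sketch below is only one possible policy; `MAX_UPLOAD`, `MIN_RATE` and `GRACE` are made-up numbers, and `Upload` is a hypothetical bookkeeping record rather than anything a real server library provides.

```python
from dataclasses import dataclass

MAX_UPLOAD = 64 * 1024 * 1024   # hypothetical cap on declared upload size, in bytes
MIN_RATE = 1024                 # hypothetical minimum average rate, bytes per second
GRACE = 10.0                    # seconds before the rate check starts to apply

@dataclass
class Upload:
    declared_size: int    # what the client said it would send
    started_at: float     # timestamp when the upload began
    received: int = 0     # bytes received so far

def should_drop(upload, now):
    """Return a reason string if the upload should be cut off, else None."""
    if upload.declared_size > MAX_UPLOAD:
        return "too large"
    elapsed = now - upload.started_at
    # After the grace period, require a minimum average transfer rate.
    if elapsed > GRACE and upload.received < MIN_RATE * elapsed:
        return "too slow"
    return None

huge = Upload(declared_size=20 * 1024**3, started_at=0.0)
trickle = Upload(declared_size=1024**2, started_at=0.0, received=60)
normal = Upload(declared_size=1024**2, started_at=0.0, received=1_000_000)

print(should_drop(huge, now=0.0))      # too large
print(should_drop(trickle, now=60.0))  # too slow
print(should_drop(normal, now=60.0))   # None
```

The hard part is not writing a check like this but picking the numbers, since they depend on how much memory and bandwidth the server really has.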

You can probably come up with relatively simple solutions to those kinds of problems (at least in isolation), but it becomes a lot more complicated if you want to make an efficient server that can handle lots of clients simultaneously. You need to be able to manage memory in a way that is efficient and causes the least amount of problems when the server starts reaching its limit in traffic.

How much memory do servers typically use per client requesting pages? How many clients can typically upload resources at the same time? How much of the system's memory is used by the I/O and how much by the server's actual functionality such as database and file processing? How many simultaneous clients can a typical server handle? What can you expect to be able to do, given certain server hardware specifications?

Maybe I'm just thinking about it too hard, but there are way too many unknowns. Maybe I'm doing something that's dumb and way worse than a typical server. Maybe I'm making things too hard for myself by trying to accommodate way more clients than the server hardware is even capable of. Maybe when I make a website it'll be blazingly fast and overkill and can serve 60 billion clients on a $5 shoebox server. Maybe it will crash and burn immediately because I made some fundamentally wrong assumptions about how I should manage memory and what kind of things I should prioritize.

I don't want to spend a ton of effort making an elaborate and efficient magnum opus server if it's going to be completely wrong in some fundamental way. I feel like the only way to know what to do is trial and error, and even that doesn't really work. It's extremely difficult to measure specific conditions because those conditions depend on a ton of people trying to use and/or abuse the server at the same time, and even if you get those conditions, the server is probably halfway across the planet.

To give a more practical example, how should you distribute memory for clients/sockets so that they can send and receive data? If a client wants to upload a file, do you just try to allocate memory for it and then fail if there isn't enough? Do you have some kind of fixed size, pre-allocated small data buffer and stream the HTML form data onto the disk, and then process the form data later? Does each socket have its own I/O buffer, or do you have a smaller number of buffers, give them to sockets that are actively transferring data, and take them away from sockets that are idle? What about I/O in the other direction if you need to generate a unique document for a particular client?
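The last of those options, a small shared set of buffers lent to whichever sockets are active, could look roughly like this. It is a sketch under assumptions, not a recommendation: `BufferPool`, its method names, and the use of plain integers as socket IDs are all invented for the example.

```python
class BufferPool:
    """A fixed set of pre-allocated I/O buffers lent to active sockets."""

    def __init__(self, count, size):
        self.size = size
        # All memory is allocated up front: count * size bytes, never more.
        self.free = [bytearray(size) for _ in range(count)]
        self.lent = {}   # socket ID -> buffer currently lent to it

    def acquire(self, sock_id):
        """Lend a buffer to a socket, or return None if the pool is exhausted."""
        if sock_id in self.lent:
            return self.lent[sock_id]
        if not self.free:
            return None   # caller must wait, or reclaim a buffer from an idle socket
        buf = self.free.pop()
        self.lent[sock_id] = buf
        return buf

    def release(self, sock_id):
        """Take the buffer back, for example when the socket goes idle or closes."""
        buf = self.lent.pop(sock_id, None)
        if buf is not None:
            self.free.append(buf)

pool = BufferPool(count=2, size=4096)
a = pool.acquire(1)
b = pool.acquire(2)
c = pool.acquire(3)        # pool exhausted: socket 3 gets nothing yet
pool.release(1)            # socket 1 went idle
d = pool.acquire(3)        # now socket 3 can proceed
print(c is None, d is not None)  # True True
```

The appeal of this design is that memory use has a hard ceiling no matter how many sockets are open; what it doesn't answer is how large `count` and `size` should be.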

Did you know that when you open a website, your browser will open about 5 sockets to the server, and keep them open even if you don't do anything? So basically, if your server has an array of 1000 socket objects or whatever for handling I/O, 200 people with a tab of your webpage open in their browser will cause your server to be unable to respond to anyone else at all. Obviously you can have a lot more than 1000, but how many exactly, and how much memory can each one keep to themselves?
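The arithmetic behind that 200, plus the same calculation run from a memory budget instead of a slot count. The 1 GiB budget and 16 KiB per socket are placeholder numbers picked purely for illustration, not measurements of any real server.

```python
def max_visitors(socket_slots, sockets_per_visitor=5):
    """How many browser tabs fit if each one holds several sockets open."""
    return socket_slots // sockets_per_visitor

def sockets_for_budget(budget_bytes, bytes_per_socket):
    """How many sockets fit if each one keeps a fixed amount of memory."""
    return budget_bytes // bytes_per_socket

print(max_visitors(1000))                            # 200

# Hypothetical budget: 1 GiB for socket buffers, 16 KiB per socket.
slots = sockets_for_budget(1 * 1024**3, 16 * 1024)
print(slots, max_visitors(slots))                    # 65536 13107
```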

I'm very concerned about this so I'm trying to think of ways to efficiently and flexibly distribute memory and allow as many sockets to be open simultaneously as possible, but I have absolutely no mental picture of where the bottlenecks in a server are.

As for what websites I actually want to make, the biggest one is my own imageboard, although it might be different from the typical imageboard so I'm not sure if I should even call it such. I've wanted to try making a better website for hosting videogame mods, but maybe that niche is being filled by other people now. There's a bunch of small "services" I'd like to make, like a pastebin clone. Also minigames like different kinds of online drawing canvases. I'd like to make a chat program similar-ish to Discord, except with easier access and less evil. All of these may theoretically become very active, so I want a very good server that can handle a lot of traffic without me having to buy a bunch of extra servers or start decentralizing it.